Where can I find user reviews of leading AI translation tools?

This question usually comes up when teams need to make a concrete decision. A tool may look convincing at first glance, but reviews often reveal how it behaves once used in real workflows. The main challenge is understanding which sources provide feedback that reflects your specific context.

Linguists, business teams, and developers evaluate tools through different lenses, and each perspective shapes AI translation tool reviews in a different way.

Knowing where to find AI translation tool reviews, and which sources to trust, helps you move beyond generic ratings and focus on what actually matters in day-to-day usage.

TL;DR

Why it matters: Picking an AI translation tool based on a demo is how teams end up paying later in manual cleanup, broken formatting, and inconsistent terminology. The right review sources help you predict those issues early, based on people running similar workflows. That makes your shortlist faster, safer, and easier to defend internally.

Best sources to start with (fast, reliable signal)

- G2 and Capterra for structured business reviews (usability, support, ROI, integrations).

- Gartner Peer Insights for enterprise-grade feedback and “pros/cons” patterns.

- ProZ.com forums for translator-grade quality notes (terminology, language pairs, QA).

- GitHub issues/discussions for API reliability, edge cases, and integration pain.

- Trustpilot for “customer experience” signals (billing, support, delivery expectations), best used as a secondary check.

Why finding quality AI translation tool reviews matters

When a translation tool falls short, the impact is immediate. Accuracy issues affect trust, weak integrations slow teams down, and missing capabilities force manual fixes. Reviewing reliable user ratings for AI translators helps highlight these risks early, before a tool becomes embedded in operational processes.

Clear customer feedback on translation software shows how tools perform in real conditions, rather than in controlled demos. This makes review analysis a practical step for organizations that rely on machine translation.

Professional communities for customer feedback on translation software

When linguistic quality is a priority, professional translators often provide the most detailed feedback. Their evaluations are typically based on daily use across multiple languages and content types.

ProZ.com translation community

ProZ.com hosts a large professional translator network. Its forums include detailed AI translation tool reviews focused on language-pair accuracy, terminology handling, and compatibility with CAT tools. Much of this feedback comes from working professionals with direct project experience.

TranslatorsCafe professional network

Discussions on TranslatorsCafe often focus on workflow efficiency, delivery timelines, and quality expectations in client projects. This feedback is useful when evaluating tools for commercial translation environments.

LinkedIn professional groups

LinkedIn groups host ongoing conversations about tool performance in business settings. Contributors often combine linguistic feedback with operational and cost considerations.

Business software platforms for best rated translation tools

When the goal is to compare tools across teams or industries, business review platforms offer structured insights. This is especially useful for teams evaluating translation tools as part of a broader stack (CMS, help center, product, support, and analytics).

G2 business software reviews

G2 aggregates AI translation tool reviews from business users. Ratings are often segmented by company size and industry and cover usability, support, and integration aspects. This helps identify best rated translation tools in specific operational contexts.

Capterra software marketplace

Capterra allows users to filter reviews by features, deployment models, and business size. This supports comparison of tools based on concrete requirements rather than general popularity.

Trustpilot business reviews

Trustpilot reviews often reflect client-side experiences around customer support, billing clarity, and delivery satisfaction. Use this as a secondary layer, not your only decision input.

Technical communities for user ratings for AI translators

When teams need to understand how a tool behaves in production, technical communities provide the most hands-on feedback. You will see real error messages, edge cases, and integration constraints that never show up in marketing pages.

Reddit technical communities

On Reddit, search for tool-specific threads (for example: “API rate limit”, “PDF OCR accuracy”, “XLIFF import/export”, “glossary enforcement”, “layout retention”) and look for comments that include files tested, language pairs, and screenshots. Treat upvotes as a hint, not proof.

GitHub project discussions

GitHub issues and discussions include reports on performance, integration challenges, and API behavior. Developers share hands-on feedback that is useful for evaluating scalability, reliability, and SDK maturity.

Stack Overflow translation tags

Questions related to translation APIs highlight recurring problems and constraints. This is where you will learn what breaks during authentication, batching, file uploads, or asynchronous jobs, and how teams work around it.

Academic sources for rigorous comparison of MT tools

Academic research offers structured comparison of MT tools using standardized evaluation methods. It can be great for understanding trends and limits, but it may not reflect your exact workflow or the newest product updates.

Association for Computational Linguistics papers

ACL Anthology includes peer-reviewed studies comparing machine translation systems across languages and domains, with a focus on measurable outcomes rather than subjective impressions.

Translation studies journals

Research in translation studies evaluates tools from a linguistic and professional perspective, examining terminology consistency, domain adaptation, and cultural accuracy.

How to evaluate AI translation tool reviews effectively

Not all AI translation tool reviews provide the same level of value. Context is what separates a “nice product” from the “right fit,” so check it before anything else.

Mini framework (easy to quote)

Role + Content type + Workflow + Risk + Recency. A review is only “reliable” if it matches who you are, what you translate, how you ship it, what can go wrong, and whether the feedback reflects today’s version of the tool.

Verify reviewer context (Role)

Reviews are more useful when the reviewer’s role is clear. Translators focus on linguistic accuracy and QA. Business teams focus on speed, consistency, and collaboration. Developers focus on APIs, reliability, and security. If the role is missing, the review is hard to apply.

Match the content (Content type)

Look for reviews that mention your real inputs: scanned PDFs, Office docs, knowledge base articles, UI strings, subtitles, legal docs, or localization formats like XLIFF. “Great translation” means nothing if the reviewer only tested short paragraphs.

Validate the workflow (Workflow)

A tool that works for copy/paste can still fail in file-based workflows. Prioritize reviews that mention upload/download quality, collaboration, revisions, or integration into existing tools.

Read for failure modes (Risk)

The best reviews describe what breaks. This is where you learn the hidden cost: manual fixes, rework, or “we stopped trusting output.”

Check freshness (Recency)

Machine translation evolves quickly. Recent user ratings for AI translators are more likely to reflect current capabilities than older reviews.
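
The five checks above can be sketched as a simple relevance score. This is only an illustration: the `Review` and `Needs` classes, the `relevance` function, the equal weighting of the checks, and the one-year `max_age_days` cutoff are all assumptions made for the example, not part of any review platform.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Review:
    role: str                # e.g. "translator", "developer", "business"
    content_types: set       # e.g. {"xliff", "scanned_pdf"}
    workflow: str            # e.g. "file_based", "copy_paste", "api"
    mentions_failures: bool  # does the review describe what broke?
    posted: date


@dataclass
class Needs:
    role: str
    content_types: set
    workflow: str


def relevance(review, needs, today, max_age_days=365):
    """Count how many of the five checks a review passes (0 to 5)."""
    checks = [
        review.role == needs.role,                         # Role
        bool(review.content_types & needs.content_types),  # Content type
        review.workflow == needs.workflow,                 # Workflow
        review.mentions_failures,                          # Risk
        (today - review.posted).days <= max_age_days,      # Recency
    ]
    return sum(checks)


# Example: a recent developer review that matches on every check.
needs = Needs("developer", {"xliff"}, "api")
review = Review("developer", {"xliff", "json"}, "api", True, date(2025, 1, 10))
print(relevance(review, needs, today=date(2025, 6, 1)))  # → 5
```

A low score does not mean the review is wrong, only that it describes a situation different from yours. In practice you may want to weight Role and Content type more heavily than the other checks.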

Concrete examples: what to look for in reviews

If you want reviews that predict real performance, scan for these concrete signals. They map to the issues that create rework, delays, and risk.

| Capability | What to look for in reviews | Why it matters |
|---|---|---|
| OCR accuracy | Mentions of scanned PDFs, screenshots, tables, stamps, low-quality photos, and how often OCR missed text or misread numbers. | Bad OCR silently removes meaning and creates compliance risk (names, IDs, dates, dosage, totals). |
| Glossary support | Whether the tool enforces brand/product terms, supports variants, and avoids “creative” drift on key names. | Terminology inconsistency causes rework, brand errors, and user confusion in UI/support content. |
| XLIFF handling | Comments about preserving tags/placeholders, not breaking segmentation, and round-tripping files cleanly. | Broken XLIFF means broken builds, broken UI strings, and costly localization QA. |
| Layout retention | Notes about tables, headers, footnotes, line breaks, and whether the exported file stayed usable. | If formatting breaks, the “translation” is not deliverable. Someone has to rebuild it manually. |
| API limits | Rate limits, file size caps, async jobs, retries, timeouts, and how errors are surfaced. | These define whether a translation pipeline is stable or a constant firefight. |
| Data residency | Where data is processed, retention controls, privacy modes, and whether reviewers mention security reviews/procurement. | Security requirements can eliminate a tool instantly, regardless of translation quality. |
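
If you are reading through a large batch of reviews, a rough keyword scan can flag which of the capabilities in the table each review touches. The sketch below assumes you have the review text as plain strings. The `SIGNALS` keyword lists and the `tag_review` function are hypothetical and deliberately small, so extend them with the terms that matter for your own files.

```python
import re

# Hypothetical keyword lists, one per capability from the table.
SIGNALS = {
    "OCR accuracy": ["ocr", "scanned", "scan"],
    "Glossary support": ["glossary", "terminology", "termbase"],
    "XLIFF handling": ["xliff", "placeholder", "placeholders", "tags"],
    "Layout retention": ["layout", "formatting", "tables"],
    "API limits": ["rate limit", "timeout", "file size", "retry", "retries"],
    "Data residency": ["data residency", "data retention", "gdpr", "privacy"],
}


def tag_review(text):
    """Return the capabilities a review mentions, in table order."""
    lowered = text.lower()
    found = []
    for capability, keywords in SIGNALS.items():
        # Whole-word match, so "scan" does not fire on "scandal".
        if any(re.search(r"\b" + re.escape(k) + r"\b", lowered) for k in keywords):
            found.append(capability)
    return found


sample = "The API hit a rate limit on large batches and the XLIFF export dropped placeholders."
print(tag_review(sample))  # → ['XLIFF handling', 'API limits']
```

A keyword hit only tells you a review is worth reading closely. It says nothing about whether the reviewer's experience was good or bad.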

Lara Translate for professional translation workflows

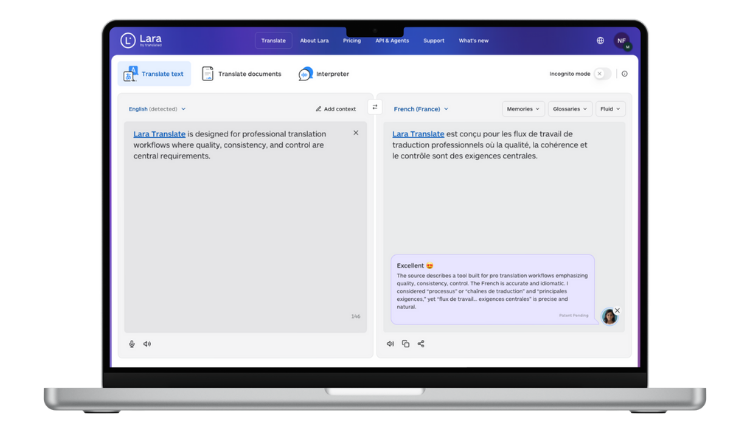

Lara Translate is designed for professional translation workflows where quality, consistency, and control are central requirements.

Core elements frequently evaluated in customer feedback on translation software include:

- Support for 70+ file types, including Office documents, PDFs, localization formats, and more

- Multiple translation styles (Faithful, Fluid, Creative) to adapt output to different content types

- Glossaries to enforce product names and key terminology across texts and documents

- Translation memory to store edits and reuse approved phrasing consistently

- Privacy controls through Learning and Incognito modes for sensitive workflows

Try Lara Translate in your own workflow

Test Lara Translate on a real file and see how it handles terminology, context, and formatting.

Understanding advanced translation technology in user reviews

Interpreting customer feedback on translation software often requires basic awareness of how different translation systems are designed. Reviews increasingly reflect differences in workflows, configuration options, and integration approaches. Different users may evaluate the same tool under different conditions, which can explain why AI translation tool reviews vary across sources.

Navigating different tool categories

One reason reviews feel inconsistent is that people compare different tool types. A “translation tool” might mean an MT engine, a translation management system (TMS), a CAT tool workflow, or a lightweight translator app. Make sure reviews match the category you are actually buying.

Industry-specific translation feedback

Different industries emphasize different evaluation criteria. Subtitle translation reviews often mention timing accuracy and formatting requirements for SRT and VTT files. Medical and legal professionals prioritize accuracy and compliance, while marketing teams focus on tone and brand consistency. These perspectives influence any comparison of MT tools.
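
For subtitle work, one claim you can verify yourself rather than take from a review is whether cue timings survive translation. The following is a minimal sketch that assumes standard SRT timing lines (`HH:MM:SS,mmm --> HH:MM:SS,mmm`). It does not cover VTT, which uses a period before the milliseconds, and the function names are made up for illustration.

```python
import re

# Matches an SRT cue timing line such as "00:00:01,000 --> 00:00:04,000".
TIMING = re.compile(r"(\d{2}:\d{2}:\d{2},\d{3}) --> (\d{2}:\d{2}:\d{2},\d{3})")


def cue_timings(srt_text):
    """Extract (start, end) pairs from SRT text, in file order."""
    return TIMING.findall(srt_text)


def timings_preserved(source_srt, translated_srt):
    """True if the translated file keeps every cue timing unchanged."""
    return cue_timings(source_srt) == cue_timings(translated_srt)


source = "1\n00:00:01,000 --> 00:00:04,000\nHello there.\n"
translated = "1\n00:00:01,000 --> 00:00:04,000\nCiao.\n"
print(timings_preserved(source, translated))  # → True
```

Running a check like this on one of your own files gives you a concrete data point to compare against what reviewers report.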

The role of human-AI collaboration

Many user ratings for AI translators focus on how tools fit into existing human workflows. Translators often describe AI as a support layer that speeds up routine work while leaving final decisions to humans.

FAQs

Where can I find user reviews of leading AI translation tools?

Start with G2, Capterra, and Gartner Peer Insights, then validate with ProZ.com forums and GitHub discussions for workflow and API reality checks.

Are AI translation tool reviews useful for business decisions?

Yes, when the reviewer’s role, content type, and workflow match yours, and the review is recent enough to reflect current versions.

What is the difference between user ratings for AI translators and expert evaluations?

User ratings reflect real workflows and operational friction, while expert evaluations often rely on controlled benchmarks that may not match your constraints.

How should I approach a comparison of MT tools?

Use the framework: Role + Content type + Workflow + Risk + Recency, then run a real-file test with your glossary and formats.

Do best rated translation tools fit every use case?

No. “Best rated” usually reflects a specific audience and workflow, so always validate against your own files and risk profile.

This article is about

- Where to find reliable AI translation tool reviews

- How to interpret customer feedback on translation software

- How to assess best rated translation tools by context

- How to use user ratings for AI translators effectively

- How to approach a practical comparison of MT tools
